I want you to pretend that you’re the Editor-in-Chief of a science-focused magazine.

You’re sitting at your desk one day when you receive two emails.

The first email is from someone who just wrote a paper showing that exposing people to money makes them more selfish.

The second email is from someone who just finished some experiments showing that exposing people to money has no impact on their behavior.

Which person would you write back to? Which piece of research would you pick for your magazine?

Probably the first one.

It’s flashier. It’s more interesting. It’ll make for a better issue.

This scenario takes place throughout the scientific world, and it’s a huge problem.

It means that we, the consumers of science, are exposed to a biased sample of the research on any given topic. Sure, there may be 300 published studies showing that people exposed to money are more selfish, but how many studies that showed no effect are sitting unpublished in filing cabinets around the world? No one knows. And without this info, it’s hard for us to *really* know what the truth is on any given issue.

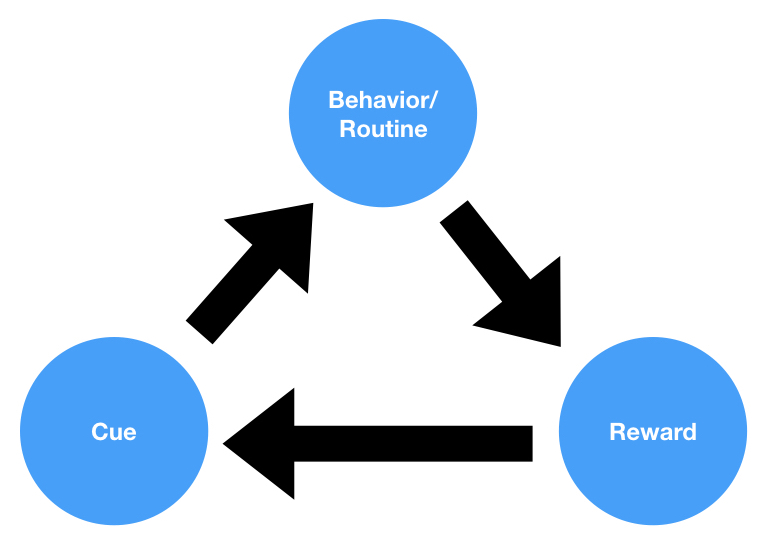

This is all due to publication bias. Journals and magazines don’t like publishing studies that find “no effect”.
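To get a feel for how much this filter can distort things, here's a rough simulation sketch. It rests entirely on made-up assumptions: the true effect of money on selfishness is exactly zero, every study has 30 people per group, and a study only gets "published" if it finds a statistically significant effect in the flashy direction (z above 1.96). The function names `run_study` and `simulate` are invented for this illustration, and real publication decisions are far messier than a single cutoff.

```python
import random
import statistics


def run_study(true_effect, n, rng):
    """Simulate one two-group study; return (observed effect, z statistic)."""
    control = [rng.gauss(0.0, 1.0) for _ in range(n)]
    treated = [rng.gauss(true_effect, 1.0) for _ in range(n)]
    diff = statistics.fmean(treated) - statistics.fmean(control)
    # Standard error of the difference between two independent group means.
    se = (statistics.variance(treated) / n + statistics.variance(control) / n) ** 0.5
    return diff, diff / se


def simulate(num_studies=2000, true_effect=0.0, n=30, seed=0):
    """Run many studies; 'publish' only significant positive results (assumed rule)."""
    rng = random.Random(seed)
    studies = [run_study(true_effect, n, rng) for _ in range(num_studies)]
    all_effects = [diff for diff, _ in studies]
    published = [diff for diff, z in studies if z > 1.96]
    return all_effects, published


if __name__ == "__main__":
    all_effects, published = simulate()
    print(f"studies run: {len(all_effects)}, published: {len(published)}")
    print(f"mean effect, all studies:    {statistics.fmean(all_effects):+.3f}")
    if published:
        print(f"mean effect, published only: {statistics.fmean(published):+.3f}")
```

Under these assumptions, the average across all the simulated studies should sit close to zero, while the average across only the "published" ones should look like a clear positive effect, even though nothing is really there.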

And it’s a shame. It just means that those of us in the business have to spend a lot of time thinking critically about the research that we encounter. It also means that we have to spend plenty of time talking with researchers in the know, so that we can get an inside view on the state of the research.

There’s also a nifty tool we can use to detect this bias. It’s called a “funnel plot”. But that’s another topic for another time.