Two days ago, the Proceedings of the National Academy of Sciences (PNAS) published a letter that I can only describe as a bombshell.

Here it is: No evidence for nudging after adjusting for publication bias

The authors took a meta-analysis published in PNAS last December (Mertens et al.), which reported that “choice architecture interventions overall promote behavior change with a small to medium effect size of Cohen’s d = 0.43…”, and recalculated its effect sizes to account for the publication bias they observed.
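For readers who haven’t met Cohen’s d: it’s a standardized mean difference, the gap between the treatment and control group means expressed in units of their pooled standard deviation. This is the textbook two-group form; the meta-analysis may compute it with small-sample corrections I haven’t checked:

$$
d = \frac{\bar{x}_T - \bar{x}_C}{s_p}, \qquad
s_p = \sqrt{\frac{(n_T - 1)\,s_T^2 + (n_C - 1)\,s_C^2}{n_T + n_C - 2}}
$$

So d = 0.43 means the average person in the nudged group ends up about 0.43 pooled standard deviations beyond the average person in the control group on whatever outcome was measured.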

Publication bias refers to the fact that not every experiment gets published. Before the results of an experiment reach the public, they have to pass through several people, each with their own vested interests. As a result, certain types of results rarely make it into the published literature. Boring results (null results) are unlikely to be written up as a paper, and unlikely to be accepted by a journal looking for eyeballs. And results that go against a researcher’s pet theory or life’s work are even less likely to make it out of the room.
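To make the mechanism concrete, here’s a toy simulation (my own sketch, not something from the letter). It runs many small two-group experiments where the nudge truly does nothing (d = 0) and assumes that only statistically significant results in the hoped-for direction get “published.” The significance check is a normal approximation rather than an exact t-test, and the parameters (30 people per group, 2,000 studies) are arbitrary.

```python
import random
import statistics


def cohens_d(treated, control):
    """Standardized mean difference using the pooled standard deviation."""
    n1, n2 = len(treated), len(control)
    pooled_var = ((n1 - 1) * statistics.variance(treated)
                  + (n2 - 1) * statistics.variance(control)) / (n1 + n2 - 2)
    return (statistics.mean(treated) - statistics.mean(control)) / pooled_var ** 0.5


def simulate_literature(true_d=0.0, n_per_group=30, n_studies=2000, seed=1):
    """Run many small experiments; keep only the significant positive ones as 'published'."""
    rng = random.Random(seed)
    all_effects, published = [], []
    for _ in range(n_studies):
        control = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        treated = [rng.gauss(true_d, 1.0) for _ in range(n_per_group)]
        d = cohens_d(treated, control)
        all_effects.append(d)
        # Normal approximation to the two-sample test statistic for equal group sizes.
        z = d * (n_per_group / 2) ** 0.5
        # Assumption: only results that are significant in the hoped-for direction get published.
        if z > 1.96:
            published.append(d)
    return all_effects, published


TRUE_D = 0.0
all_effects, published = simulate_literature(true_d=TRUE_D)
print(f"true effect:               {TRUE_D:.2f}")
print(f"mean of all studies run:   {statistics.mean(all_effects):.2f}")
print(f"mean of published studies: {statistics.mean(published):.2f} "
      f"({len(published)} of {len(all_effects)} studies)")
```

With 30 people per group, a study only clears the significance bar when its estimate is roughly 0.5 or larger, so every “published” effect looks large even though the true effect is zero.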

All of this is to say: science isn’t an objective process. There are humans in the mix with specific incentives and biases. They are the ones who choose what the public and the rest of the scientific community sees.

Publication bias is rampant, and there’s evidence that it’s been increasing over time.

Well, it turns out that the latest victim of widespread publication bias is behavioral economics, specifically its body of research related to nudging.

I’ll start with the letter’s conclusion: “We conclude that the ‘nudge’ literature . . . is characterized by severe publication bias. Contrary to Mertens et al. (3), our Bayesian analysis indicates that, after correcting for this bias, no evidence remains that nudges are effective as tools for behaviour change.”

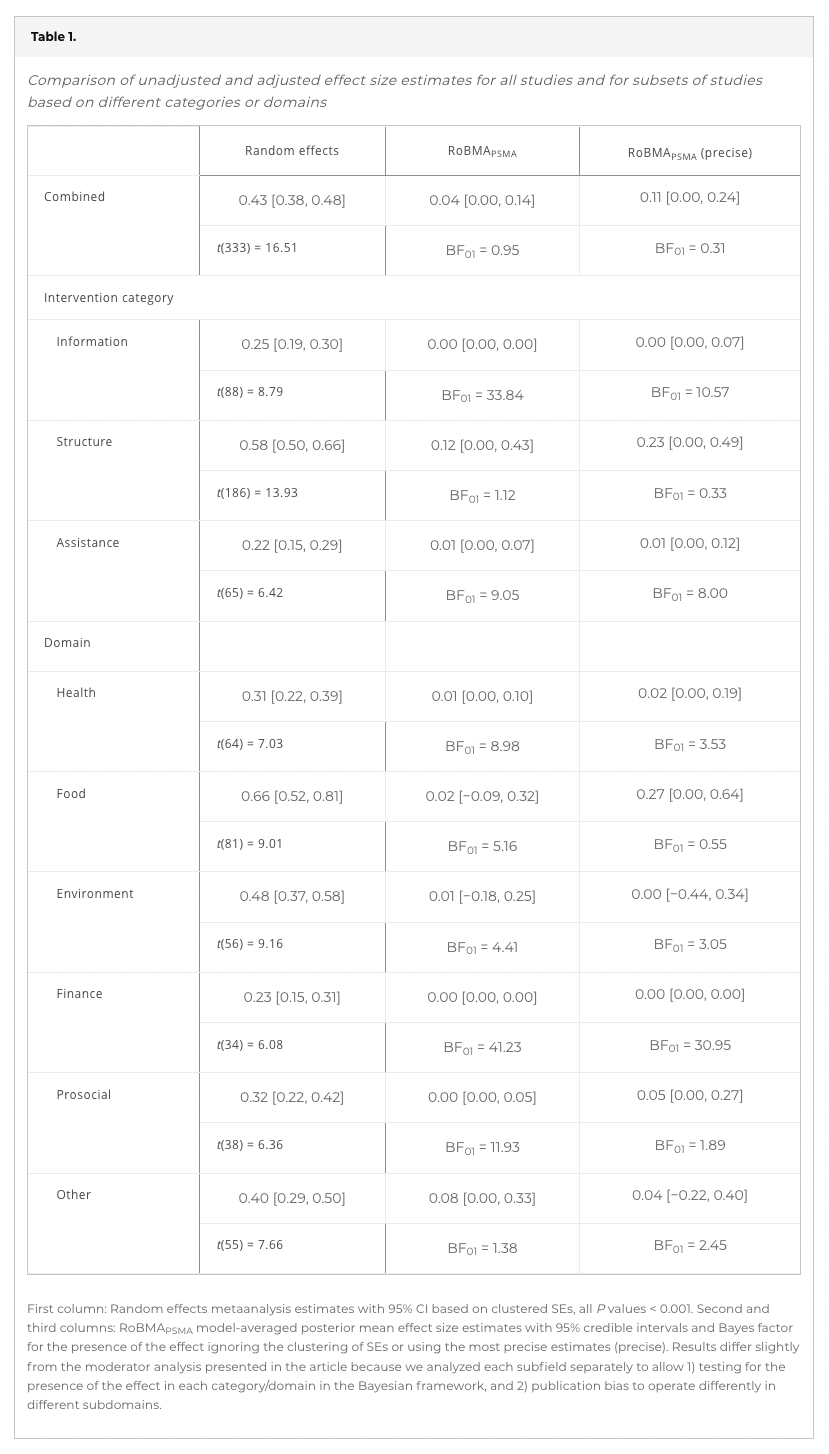

Let’s look at the main table in the paper that summarizes the results:

The first column shows the effect sizes originally reported in the Mertens et al. meta-analysis.

The other two columns show publication-bias-corrected effect sizes. As you can see, the results are quite disappointing: estimates drop from figures like 0.48 to figures like 0.01. While some types of nudges keep a non-zero bias-corrected effect size (structure, at 0.12), almost all of them collapse to 0.02 or less.
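How does a correction like this work? The letter’s own correction is a Bayesian analysis; the sketch below swaps in a much simpler, classic frequentist technique, PET (the precision-effect test), just to show the intuition. PET regresses each study’s effect size on its standard error using inverse-variance weights. If small, noisy studies report systematically bigger effects, the slope picks that up, and the intercept estimates what a hypothetical infinitely precise study (standard error of zero) would find. The numbers are invented for illustration, not taken from Mertens et al.

```python
def pet_estimate(effects, std_errors):
    """PET: weighted least-squares regression of effect size on standard error.

    Returns (intercept, slope). The intercept is the predicted effect at SE = 0;
    the slope absorbs the small-study / publication-bias pattern.
    """
    weights = [1.0 / se ** 2 for se in std_errors]  # inverse-variance weights
    sw = sum(weights)
    mean_se = sum(w * se for w, se in zip(weights, std_errors)) / sw
    mean_d = sum(w * d for w, d in zip(weights, effects)) / sw
    sxx = sum(w * (se - mean_se) ** 2 for w, se in zip(weights, std_errors))
    if sxx == 0:
        raise ValueError("PET needs studies with different standard errors")
    sxy = sum(w * (se - mean_se) * (d - mean_d)
              for w, se, d in zip(weights, std_errors, effects))
    slope = sxy / sxx
    intercept = mean_d - slope * mean_se
    return intercept, slope


# Hypothetical literature: the noisier the study, the bigger the reported effect.
std_errors = [0.05, 0.10, 0.20, 0.30, 0.40]
effects = [0.12, 0.22, 0.42, 0.62, 0.82]

naive_mean = sum(effects) / len(effects)
intercept, slope = pet_estimate(effects, std_errors)
print(f"naive average effect:        {naive_mean:.2f}")
print(f"PET bias-corrected estimate: {intercept:.2f} (slope on SE: {slope:.2f})")
```

PET is known to be crude (it tends to underestimate when a real effect exists, which is why variants such as PEESE exist), so treat this only as an illustration of why corrected estimates can collapse toward zero.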

Here’s another view of the bias-corrected effect sizes:

When you combine these results with the nudge-unit results I wrote about in an earlier article, it’s hard to take applied behavioral economics and nudging seriously. We now have public results from 126 nudge-unit RCTs showing an effect size about one-sixth of what the published literature would lead you to expect, and publication-bias-corrected meta-analysis results showing little to no impact from nudges.*

The fact that the nudging literature has rampant publication bias shouldn’t be surprising. There’s a lot of money and glory in the nudging world. Some of the biggest clients of behavioral economists are corporations and governments, which are more than happy to throw a lot of cash at people promising quick and easy solutions to big problems.

The truth is that changing behavior is hard, and each solution needs to be tailor-made for the specific problem and people in question. Coming in with standardized solutions just doesn’t work.

There is one ray of light in the letter: “all intervention categories and domains apart from ‘finance’ show evidence for heterogeneity, which implies that some nudges might be effective, even when there is evidence against the mean effect.”

This means there might be a few specific nudges that truly work. I’d like to spend more time digging into the most promising nudges covered in the meta-analysis, and their associated trials, to see whether one or two deserve to be taken seriously. My hunch, however, is that any nudges that did have an impact were cases where the chosen nudge happened to suit the situation and the people in question perfectly. That isn’t evidence for the nudge in general; it’s evidence for that nudge in a specific context with specific people.
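What does “heterogeneity” mean in practice? The letter’s heterogeneity evidence comes from its Bayesian models; as a simpler stand-in, the sketch below uses the classic DerSimonian-Laird estimator, which measures how much the true effects vary between studies (τ²) beyond what sampling noise alone would explain. The three studies are invented: their effects average exactly zero, yet they’re spread out enough that the estimated between-study standard deviation comes out at about 0.28, meaning some contexts could have real positive effects while others have negative ones.

```python
def dersimonian_laird(effects, variances):
    """Return (fixed-effect mean, tau^2) using the DerSimonian-Laird moment estimator."""
    k = len(effects)
    weights = [1.0 / v for v in variances]  # inverse-variance weights
    sw = sum(weights)
    fixed_mean = sum(w * d for w, d in zip(weights, effects)) / sw
    # Cochran's Q: weighted squared deviations from the pooled mean.
    q = sum(w * (d - fixed_mean) ** 2 for w, d in zip(weights, effects))
    c = sw - sum(w ** 2 for w in weights) / sw
    # Excess of Q over its expected value (k - 1) under no heterogeneity, floored at 0.
    tau2 = max(0.0, (q - (k - 1)) / c)
    return fixed_mean, tau2


# Hypothetical studies: one backfires, one does nothing, one works.
mean_effect, tau2 = dersimonian_laird([-0.3, 0.0, 0.3], [0.01, 0.01, 0.01])
print(f"average effect: {mean_effect:.2f}, between-study SD (tau): {tau2 ** 0.5:.2f}")
```

A near-zero average with a sizable τ is exactly the pattern the letter describes: evidence against the mean effect, but room for particular nudges to work in particular settings.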

This is the direction the behavior-change field needs to go: coming up with unique solutions for unique people in specific contexts. Let’s hope that findings like this snap people out of magical, one-size-fits-all thinking.

* The paper that summarizes the real-world RCTs also calls out publication bias as the main culprit: “We show that a large share of the gap is accounted for by publication bias, exacerbated by low statistical power, in the sample of published papers; in contrast, the Nudge Unit studies are well-powered, a hallmark of ‘at scale’ interventions.”
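The point about low statistical power can be made concrete with a back-of-the-envelope power calculation. This sketch uses a normal approximation for a two-sided, two-sample test at α = 0.05; the effect size (d = 0.10) and the sample sizes are hypothetical, chosen only for illustration and not taken from either paper.

```python
import math


def normal_cdf(x):
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def approx_power(d, n_per_group, z_crit=1.96):
    """Approximate power of a two-sided, two-sample test (normal approximation)."""
    shift = d * math.sqrt(n_per_group / 2.0)
    return normal_cdf(shift - z_crit) + normal_cdf(-shift - z_crit)


for n in (50, 200, 10_000):
    print(f"d = 0.10, n = {n:>6} per group: power ~ {approx_power(0.10, n):.2f}")
```

With 50 people per group, a real but small effect is detected less than 10% of the time, so the underpowered studies that do reach significance tend to be the ones that happened to overestimate the effect. Large, well-powered trials like the nudge-unit studies are much less exposed to that problem.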