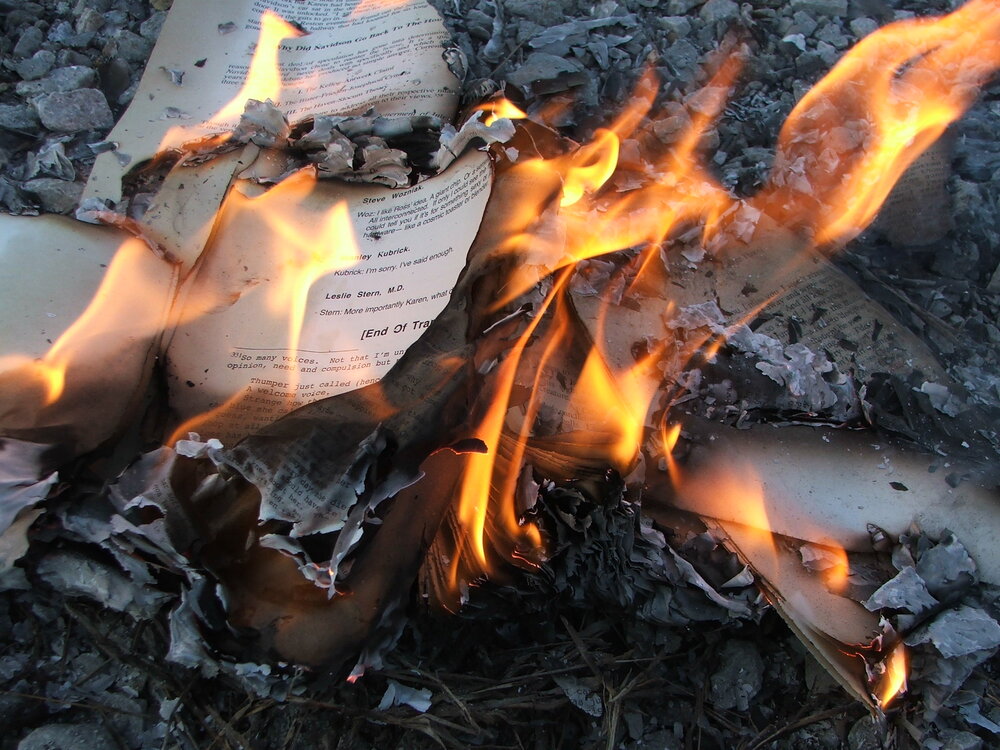

Hebbian Learning is a foundational principle in neuroscience, cognitive psychology, and behavioral science that describes how neurons form and strengthen synaptic connections over time based on their correlated activity. The concept is named after Canadian psychologist Donald O. Hebb, who introduced it in his 1949 book, “The Organization of Behavior.” It is often summarized by the later slogan “neurons that fire together, wire together,” which captures the core idea: a synaptic connection is reinforced when the neurons on either side of it are active at the same time or in close succession.

Hebbian Learning is based on the premise that when one neuron, neuron A, repeatedly and persistently takes part in firing another, neuron B, the synaptic strength between them increases. Long-term potentiation (LTP), a lasting increase in synaptic strength that follows strong, correlated activity, is widely regarded as a physiological mechanism for this kind of strengthening. Stronger synapses let the two neurons communicate more efficiently, which is thought to contribute to the formation of new neural pathways, memory consolidation, and learning.
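In computational work, this idea is commonly formalized (as a later simplification, not Hebb's own wording) by the rule Δw = η · x_A · x_B, where w is the strength of the synapse from A to B, x_A and x_B are the two neurons' activity levels, and η is a small learning rate. The minimal Python sketch below assumes binary activity (1 = firing, 0 = silent) and uses hypothetical helper names (`hebbian_update`, `simulate`); it shows that the weight grows only on trials where both neurons are active.

```python
def hebbian_update(w, pre, post, lr=0.1):
    """Plain Hebbian rule: change w by lr * pre * post."""
    return w + lr * pre * post


def simulate(trials, w0=0.0, lr=0.1):
    """Apply the update once per (pre, post) activity pair and record w after each trial."""
    w = w0
    history = [w]
    for pre, post in trials:
        w = hebbian_update(w, pre, post, lr)
        history.append(w)
    return history


# Neuron A (pre) and neuron B (post) fire together on five trials.
together = simulate([(1, 1)] * 5)
# A fires on five trials while B stays silent: the product is 0, so w never changes.
apart = simulate([(1, 0)] * 5)
print(together)
print(apart)
```

Nothing in this plain rule ever weakens a synapse or limits its growth, so models built on it usually add decay or normalization terms.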

The Hebbian Learning principle has several key implications for understanding brain function and cognitive processes, including:

- Neural Plasticity: Hebbian Learning highlights the brain’s capacity for neural plasticity, the ability to change and adapt its structure and function in response to experience, learning, and environmental stimuli.

- Memory Formation: The strengthening of synaptic connections through Hebbian Learning is thought to play a critical role in the formation and consolidation of long-term memories, as well as in the reactivation and retrieval of stored information.

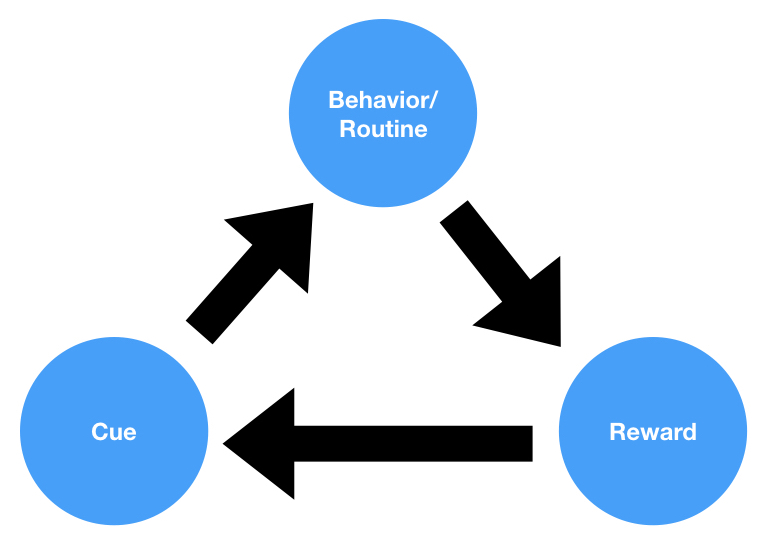

- Associative Learning: Hebbian Learning provides a neural basis for associative learning, the process through which individuals learn to associate two or more stimuli, events, or ideas, leading to the formation of conditioned responses, habits, or mental associations.
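Associative learning can be illustrated with a toy, classical-conditioning-style model. In this sketch, all names, the firing threshold, and the learning rate are illustrative assumptions: a single output neuron receives a "bell" input that starts with zero weight and a "food" input that already drives a response. Each time bell and food are presented together, the output fires and a plain Hebbian update (Δw = η · input · output) strengthens every active synapse, so after a few pairings the bell alone is enough to trigger the response.

```python
THRESHOLD = 0.5  # hypothetical firing threshold
LR = 0.2         # hypothetical learning rate


def respond(weights, inputs):
    """Binary output neuron: fires (1) if the weighted input sum reaches THRESHOLD."""
    drive = sum(w * x for w, x in zip(weights, inputs))
    return 1 if drive >= THRESHOLD else 0


def pair(weights, inputs):
    """Present a stimulus, let the neuron respond, then apply the Hebbian update to each synapse."""
    out = respond(weights, inputs)
    return [w + LR * x * out for w, x in zip(weights, inputs)], out


# weights[0]: bell (initially neutral); weights[1]: food (innately effective)
weights = [0.0, 1.0]
BELL, BOTH = [1, 0], [1, 1]

before = respond(weights, BELL)      # bell alone does nothing yet
for _ in range(3):                   # three bell + food pairings
    weights, _ = pair(weights, BOTH)
after = respond(weights, BELL)       # bell alone now drives the response
print(before, after, weights)
```

The food weight also grows during pairing; the toy model ignores this because only the bell synapse matters for the demonstration.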

Hebbian Learning has also inspired various computational models and artificial neural networks that seek to mimic the brain’s adaptive learning capabilities. These models, often referred to as Hebbian Learning algorithms or Hebbian rules, have applications in machine learning, artificial intelligence, and robotics, particularly in unsupervised learning tasks and pattern recognition problems.
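A classic computational example is the Hopfield network, an associative memory whose weights are set by a Hebbian rule: the connection between units i and j accumulates the product of their states across the stored patterns. The sketch below is a minimal pure-Python version with hypothetical function names, ±1 units, two hand-picked orthogonal patterns, and asynchronous sign updates; it shows a stored pattern with one flipped unit being restored.

```python
def train(patterns):
    """Hebbian storage: w[i][j] sums p[i] * p[j] over all patterns, scaled by 1/n, no self-connections."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w


def recall(w, state, sweeps=5):
    """Asynchronous recall: update units one at a time to the sign of their weighted input."""
    s = list(state)
    n = len(s)
    for _ in range(sweeps):
        for i in range(n):
            h = sum(w[i][j] * s[j] for j in range(n))
            s[i] = 1 if h >= 0 else -1
    return s


# Two orthogonal +/-1 patterns to store.
A = [1, 1, 1, 1, -1, -1, -1, -1]
B = [1, -1, 1, -1, 1, -1, 1, -1]
w = train([A, B])

# Corrupt pattern A by flipping its first unit, then let the network settle.
noisy = [-A[0]] + A[1:]
restored = recall(w, noisy)
print(restored == A)
```

With many stored patterns, or patterns that overlap heavily, recall of this simple form becomes unreliable, so the two orthogonal patterns here are deliberately easy.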

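Despite its significance, Hebbian Learning is only one of several mechanisms that contribute to learning, memory formation, and neural plasticity. Non-Hebbian mechanisms such as homeostatic plasticity, which keeps overall neural activity within a stable range, and neuromodulation, in which signals such as dopamine regulate when and how strongly synapses change, also play crucial roles in shaping brain function and behavior. Spike-timing-dependent plasticity (STDP), by contrast, is usually treated as a refinement of the Hebbian rule rather than an alternative to it: whether a synapse strengthens or weakens depends on whether the presynaptic spike arrives shortly before or shortly after the postsynaptic one.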

Understanding and applying the principles of Hebbian Learning in behavioral science research and practice can offer valuable insights into the neural basis of learning, memory, and cognitive processes, as well as inform the development of innovative computational models and artificial intelligence systems that emulate the adaptive learning capabilities of the human brain.